Chat with Documents using OpenAI GPT APIs (RAG use case)

If you enjoy reading this article: you will enjoy reading about how to add voice interface to ChatGpt article.

RAG: Retrieval Augmented Generation is a very common use case where we are interested in finding information from our data using chat Q&A session. RAG methods enable us to use LLM (Large Language Model) on our knowledge base. This is much cheaper than training a model from scratch or fine-tuning, and much easier to implement.

RAG use case solves a common problem that most of us deal with everyday. It is the problem of searching content from organizational knowledge using default keyword based search.

A small example like trying to understand Healthcare benefits guide. In this part, I am going to share how to Q&A on a benefits guide pdf file and see how easy it is to get answers using LLM model.

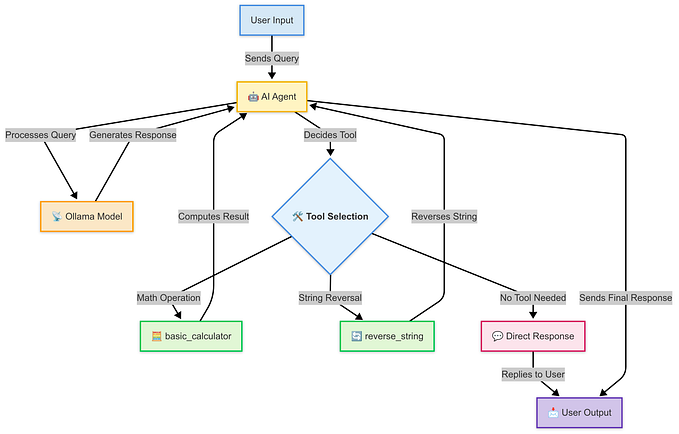

We will use OpenAI’s LLM APIs. In my research I have outlined following workflow to process the document, index it and then ask questions that can be answered using the index.

While ChatGPT can be used but, it has a limitation of amount of text that can be submitted in a single request. This limitation is because model can support upto a maxium of 4000 tokens (for simplicity, each word of text is equal to 1 token). So it is required to break down big text in to smaller chunks. Steps 1,2,3 relate to creation of an index.

Prerequisite

An OpenAI API Key is required to try this out guide on how to get an API key is here.

Process Data

Step 1: Is to break down lot of text in to smaller chunks. This is done so that we can stay within the limit of 4096 tokens when using txt-davinci-003 API.

Step 2: We create embeddings for each chunk of text by calling OpenAI’s cheaper ADA API. Embeddings are a way to represent text in number format.

embedding = openai.Embedding.create(input="Hello World", model="text-embedding-ada-002")

print(embedding["data"][0]["embedding"])

'''

Outputs

[-0.006197051145136356,

0.004149597603827715,

0.007294267416000366,

-0.02623748779296875,

-0.027276596054434776...

'''Notice that code uses “text-embedding-ada-002” whicch is the cheapest model

Step 3: Once the index is create we save it in a Index store. There are few options available for production grade systems such as Pinecone, Redis etc. To keep things simple, example below is storing it in JSON file. This was the last step in preparing data, next steps A-D will go over how to Q&A with our data.

import os

import openai

from llama_index import GPTSimpleVectorIndex, SimpleDirectoryReader, download_loader

#Replace below key with your OpenAI key

OPENAI_API_KEY = sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

#This example uses PDF reader, there are many options at https://llamahub.ai/

#Use SimpleDirectoryReader to read all the txt files in a folder

PDFReader = download_loader("PDFReader")

loader = PDFReader()

documents = loader.load_data(file='benefits.pdf')

#Code performs steps 1-3 in workflow

index = GPTSimpleVectorIndex(documents)

#Save the index in .JSON file for repeated use. Saves money on ADA API calls

index.save_to_disk('index.json')Chat with Documents

Step A: Load index from JSON file, next pass it an question

Step B: Based on the question, index will perform a similarity search on Index to indentify relavent chunks of text

Step C: This is similar to a ChatGPT Q&A, in this step, Question is sent along with context (relavent chunk of text) to OpenAI’s txt-davinci-003 API

Step D: OpenAI’s txt-davinci-003 API responds back with answer from the context it was provided

In below example, a PDF file containing benefits information is used for Q&A using Llama_Index Python Library which does most the heavy lifting.

#Chat with Document

#Load index saved in JSON format

index = GPTSimpleVectorIndex.load_from_disk(index_path)

#Query index, this takes care of steps A-D in workflow

print(index.query("Summarize the document?"))

'''Output

This document provides a summary of the benefits and coverage of the Premera BCBS of AK Standard Gold plan for individuals or families. It outlines the overall deductible, services covered before the deductible is met, other deductibles for specific services, the out-of-pocket limit, copayments and coinsurance costs for common medical events, and excluded services such as assisted fertility treatment, bariatric surgery, cosmetic surgery, dental care (adult), hearing aids, long-term care, private-duty nursing, routine eye care (adult), and weight loss programs. It also provides information about the network provider, mental health and substance abuse services, special health needs, and dental and eye care for children, as well as other covered services such as abortion, acupuncture, chiropractic care or other spinal manipulations, foot care, and non-emergency care when traveling outside the U.S. Finally, it outlines the rights to continue coverage and the agencies that can help with this.

'''

print(index.query('''What is the overall deductible amount for Individual

vs Family in our-of-network in table format?'''))

'''Output

Individual | Family

$4,000 | $8,000

'''

print(index.query('''What is the overall deductible amount for Individual

vs Family in our-of-network in table format?

Only answer from this content, if you dont know,

politely say I couldn't figure out.'''))

'''Output

Individual: $4,000

Family: $4,000

'''

print(index.query('''How much does childbirth cost? Only answer from this

content, if you dont know, politely say I couldn't figure out.'''))

'''Output

In this example, Peg would pay: Cost Sharing Deductibles $2,000

Copayments $10 Coinsurance $2,600 What isn't covered Limits or exclusions

$60 The Total Peg would pay is $4,670

'''

print(index.query("List out all the examples in table format? Only answer

from this content, if you dont know, politely say I couldn't figure out."))

'''Output

Peg's Baby | Joe's Diabetes | Mia's Fracture

------------------------------------------

Cost Sharing | Cost Sharing | Cost Sharing

Deductibles | Deductibles | Deductibles

$2,000 | $2,000 | $2,000

Copayments | Copayments | Copayments

$10 | $60 | $200

Coinsurance | Coinsurance | Coinsurance

$2,600 | $0 | $90

Limits or Exclusions | Limits or Exclusions | Limits or Exclusions

$60 | $20 | $0

Total Peg Would Pay | Total Joe Would Pay | Total Mia Would Pay

$4,670 | $1,520 | $2,290

'''Prompt Engineering

Prompt Engineering is how we control responses received from the model. Prompts can be used to dictate format of response, tone among many other things.

Below is an example of how we are asking model to only answer from the content also referred to as “Grounding”.

“What is the overall deductible amount for Individual vs Family in our-of-network in table format? Only answer from this content, if you dont know, politely say I couldn’t figure out.”

When dealing with Humans, it is always easy to communicate by giving an example. This is also true with AI. Prompts can have an example to show model should respond. This is also known as One Shot Learning.